Google’s DeepMind finds 2.2M crystal structures in materials science win

news.movim.eu / ArsTechnica · Wednesday, 29 November - 18:42

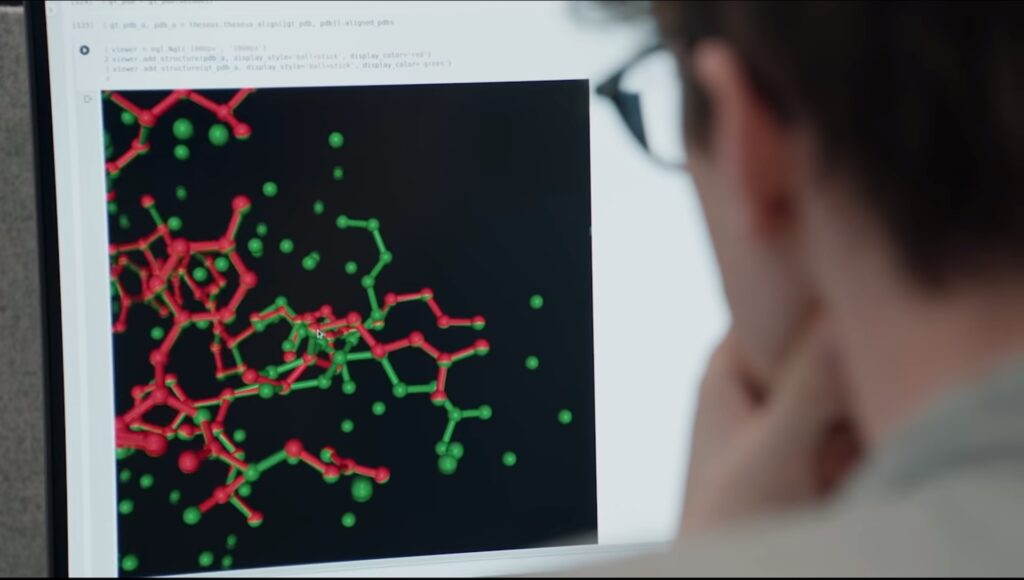

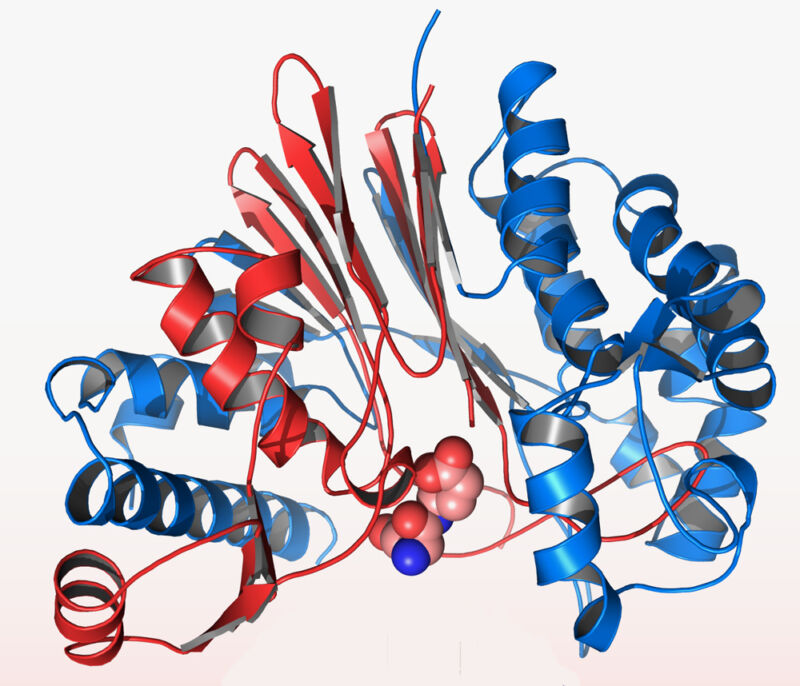

The researchers identified novel materials by using machine learning to first generate candidate structures and then gauge their likely stability. (credit: Marilyn Sargent/Berkeley Lab)

Google DeepMind researchers have discovered 2.2 million crystal structures that could open the way to progress in fields from renewable energy to advanced computation, and that show the power of artificial intelligence to discover novel materials.

The trove of theoretically stable but experimentally unrealized combinations, identified using an AI tool known as GNoME, is more than 45 times larger than the number of such substances unearthed in the history of science, according to a paper published in Nature on Wednesday.

The researchers plan to make 381,000 of the most promising structures available to fellow scientists, who can synthesize them and test their viability in fields from solar cells to superconductors. The venture underscores how harnessing AI can shortcut years of experimental graft and potentially deliver improved products and processes.