Review: AMD’s Radeon RX 7700 XT and 7800 XT are almost great

news.movim.eu / ArsTechnica · Wednesday, 6 September, 2023 - 13:00

AMD's Radeon RX 7800 XT. (credit: Andrew Cunningham)

Nearly a year ago, Nvidia kicked off this GPU generation with its GeForce RTX 4090. The 4090 offers unparalleled performance but at an unparalleled price of $1,600 (prices have not fallen). It's not for everybody, but it's a nice halo card that shows what the Ada Lovelace architecture is capable of. Fine, I guess.

The RTX 4080 soon followed, along with AMD's Radeon RX 7900 XTX and XT. These cards also generally offered better performance than anything you could get from a previous-generation GPU, but at still-too-high-for-most-people prices that ranged from $900 to $1,200 (though all of those prices have fallen a bit). Fine, I guess.

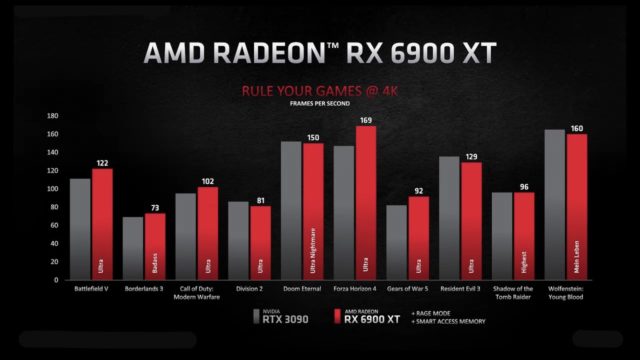

By the time the RTX 4070 Ti and 4070 launched earlier this year, we were getting down to the level of performance that had been available from previous-generation cards. These GPUs offered a decent generational jump over their predecessors (the 4070 Ti performs roughly like a 3090, and the 4070 performs roughly like a 3080). But they also got big price bumps that pushed them closer to the prices of the last-gen cards they performed like. Fine, I guess.