
An artist's interpretation of what ChatGPT might look like if embodied in the form of a robot toy blowing out a birthday candle. (credit: Aurich Lawson | Getty Images)

One year ago today, on November 30, 2022, OpenAI released ChatGPT. It's uncommon for a single tech product to create as much global impact as ChatGPT in just one year.

Imagine a computer that can talk to you. Nothing new, right? Those have been around since the 1960s. But ChatGPT, the application that first brought large language models (LLMs) to a wide audience, felt different. It could compose poetry, seemingly understand the context of your questions and your conversation, and help you solve problems. Within a few months, it became the fastest-growing consumer application of all time. And it created a frenzy.

During these 365 days, ChatGPT has broadened the public perception of AI, captured imaginations,

attracted critics

, and stoked existential angst. It

emboldened

and

reoriented

Microsoft,

made Google dance

, spurred

fears of AGI

taking over the world, captivated

world leaders

, prompted attempts at

government regulation

, helped add

words to dictionaries

, inspired

conferences

and

copycats

, led to a

crisis

for educators, hyper-charged

automated defamation

, embarrassed

lawyers

by hallucinating,

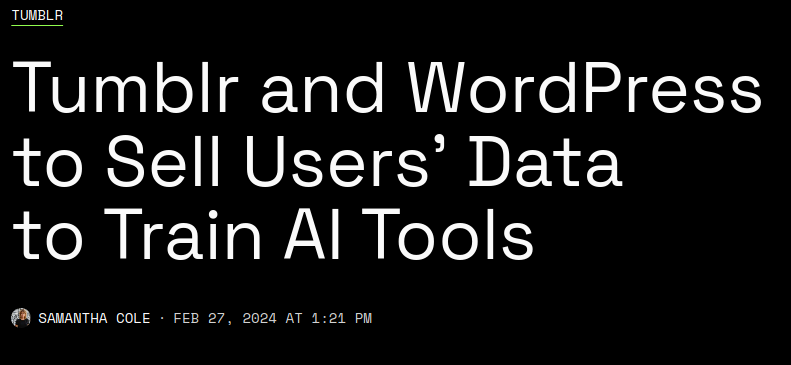

prompted lawsuits

over training data, and much more.
