
Neural implant lets paralyzed person type by imagining writing

John Timmer · news.movim.eu / ArsTechnica · Wednesday, 12 May, 2021 - 17:03

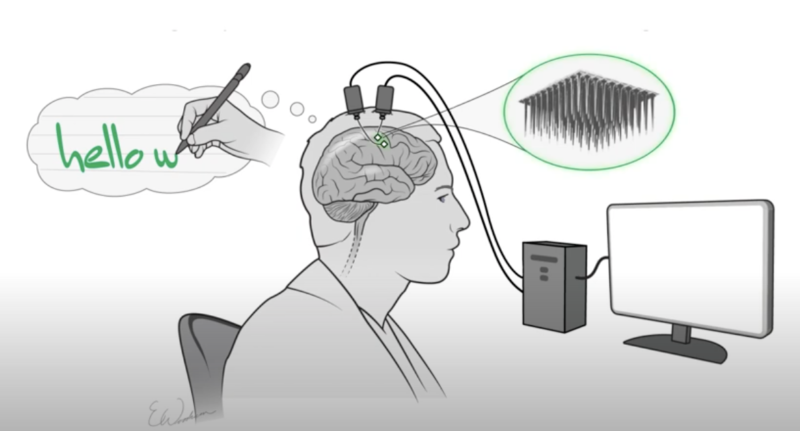

An artist's schematic of the system. (credit: Nature)

Elon Musk's Neuralink has been making waves on the technology side of neural implants, but it hasn't yet demonstrated how we might actually use them. For now, demonstrating the promise of implants remains in the hands of the academic community.

This week, the academic community provided a rather impressive example of the promise of neural implants. Using an implant, a paralyzed individual managed to type out roughly 90 characters per minute simply by imagining that he was writing those characters out by hand.

Dreaming is doing

Previous attempts to give paralyzed people typing capabilities via implants have involved presenting subjects with a virtual keyboard and letting them maneuver a cursor with their mind. The process is effective but slow, and it requires the user's full attention: the subject has to track the cursor's progress and decide when to perform the equivalent of a key press. It also requires the user to spend time learning to control the system.